I started reading and watching presentations by Dave Mark on utility-based AI. It’s excellent stuff!

The gist of utility-based AI is that you make decision for which action to select based on the “fitness” of each action, which is calculated as a function of an arbitrary number of inputs (considerations). There is an important distinction between the value of an input, and its utility for a particular action. The value is objective (e.g. getting an ice-cream is not that important in life); the utility is subjective and context-specific (if I’m really really hungry, the ice-cream’s perceived value skyrockets). A utility score is calculated from a value using response curves (linear, quadratic, logistic, etc) of the form y= f(x) , where x,y in [0,1].

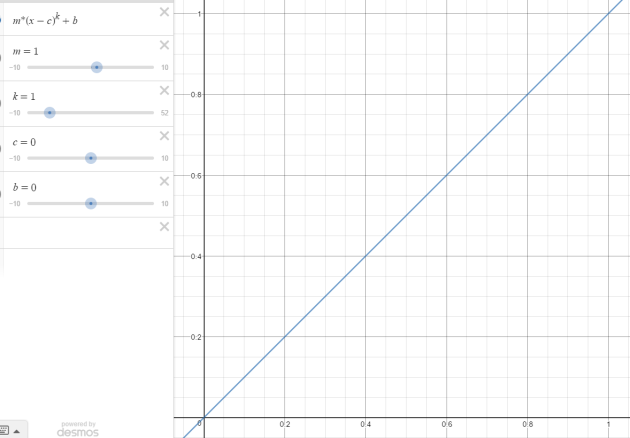

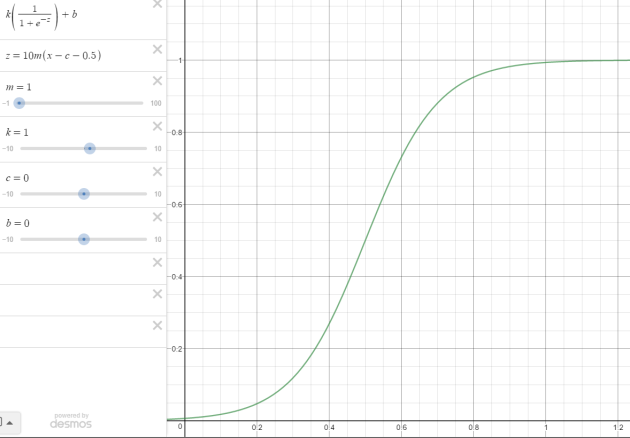

I’ve already implemented my own version (heavily borrowed, slightly adjusted, developed with the help of the wonderful Desmos) of the curves so that they are as simple to use as possible. So far, there are 3 curves:

(below images link to the corresponding Desmos graphs when clicked)

Linear/Quadratic

Logistic

Logit

and planning to add a sine and a normal distribution and that should be it for starters. In the above graphs, m and k modify the shape of the curve while b and c translate it in x/y axes.

What doesn’t make sense with the utility AI that I’ve seen so far is the combinatorial explosion that looks like it is encouraged: e.g. for an Attack decision score, it would calculate scores for Attack(Target0) Attack(Target1) for N targets. But what happens when there are lots of targets? In one presentation, number of targets is limited to 12, which is a poor approach, although practical. But what happens if actions are parameterizable in many more ways? I don’t want to create multithreaded AI and evaluations once-per-X-seconds because it takes forever.

Problem Scenario: Spellcaster NPC with a dagger, with a sword and an axe in the inventory, able to cast Frost Ray, Fire Bolt, Acid Touch and Lightning, is surrounded by 10 enemies. A melee attack can be a Power Attack, Normal or Quick Attack. This creates (10 x 3 x 3) + (10 x 4) combinations of attack actions only! Not even considering retreat or other actions. This is way too many decisions to be evaluated just for a single unit.

What some people advocate is to use utility-based AI as part of a Behavior Tree: the Selector node. That node will run the utility calculations to select the best-scoring child node . Of course that requires a behavior tree implementation, which is something that I’m planning for later. Of course, it also complicates the implementation, as we now have 2 AI systems to worry about.

A simple alternative that I’ve been thinking is to use for starters a tiered form of utility calculations, akin to a behavior tree composed only of Selector nodes.

Tier 1: Select between high-level behaviors (e.g Attack, Retreat, SelfBuff, Idle)

Tier 2: Select among commands that satisfy that behavior (e.g. MeleeAttack, RangedAttack, HeadButt, SuperKick, TornadoStrike)

Tier 3: Execute a mini-FSM that sets up the command’s parameters and executes the command. A melee attack FSM would for example select a target, and if the target was at melee range, it would schedule the attack (and the FSM completes), or if the target is not at melee range, it would schedule a move towards the target (and the FSM, again, completes). Such small FSMs are not a big fuss to implement, and allow scriptability and fast execution. The FSM should be able to reuse whatever intermediate data have been computed by the previous tiers.

A couple notes: sometimes tier 1 could directly lead to tier 3 (e.g. there are no particular “retreat” skills, besides I guess an Expeditious Retreat spell 🙂 ). Additionally, while I mentioned FSM, there no reason why that could not be a small behavior tree, or even a class that runs a few if-then-else statements. The goal of tier 3 is the same: set the parameters and schedule a command for execution.

So that’s it for now, all talk and no action. Next time hopefully with an implementation of the above tiered system.

Pingback: AI: Blackboard and Input Cache | Age of Transcendence

On the project I work on, we started with a pure Utility AI and stumbled into a lot of the same issues you note here (combinatorial explotion). The solution we came up with was to layer GOAP on top of it, where we only look at the world around us when we have found the best goal plan possible (we look at world state to create the best possible variant of a goal plan). We chose to solve our GOAP top-down rather than bottom-up, so maybe it’s more of a HTN truly, but the concepts are still strongly GOAP…

So all goals score against each other, but will only consider very cheap considerations. Within a goal every goal plan will score against each other, and can be slightly more expensive considerations. If all variants of a winning goal plan proves invalid, be fall back to the next best and continue falling back until we find a valid goal plan variant.

What I like about GOAP over Behaviour Trees is that planning remains very modular and not stuck in rigid branches of “designed” behaviour.

On other projects we have explored Apex Utility AI, which does layer a Behaviour Tree style hierarchy on top of the Utility AI, and what we are seeing there is that a lot of the time we want to compare a lot of “qualifiers” in a single “selector”, and within these “qualifiers” there are so many “scorers/considerations” with numbers to tweak, that copying them into specialized versions isn’t trivial (eg. you want a wolf to behave slightly differently from the base animal ai).

With GOAP there’s none of this really, everything remains very modular.

In our game, the best goal might be to create shelter, but that might require you to cut down a tree for lumber, which might require that you have an axe, which you might have to craft. We introduced a concept we called Desires to solve this kind of chaining, rather than “code” it into action chains. So that if you want to build that shelter, but some tools or resources to do so is missing, we create desires for those, that then boost related decisions in the planner.

Bottom line is, I don’t think there’s any one solution that can fit all the problems you want to solve with an AI. The trick is to use the strength of multiple techniques to compliment each other.

LikeLike

Thanks for all the info, it’s quite interesting! I haven’t given GOAP a good read and a try yet, mainly to avoid ovewhelming myself with options, as I need to test things out first. Plus I read somewhere that it’s not really used any more, but that opinion might have been biased and not very qualified. Utility by itself looks like it’s impractical to use, and FSMs are very … un-iterative, so that’s why I’m trying out behavior trees. So I guess GOAP isn’t dead yet, which is nice, as the concept of goal/desire driven behavior definitely sounds intriguing! Does it scale? Can you for example make super-dumb zombies that can use a rapidly evaluated AI using GOAP? I want to avoid implementing every AI technique under the sun and maintain it for the final game, so I’m trying to find a combo that a) works well b) is scalable/tweakable in terms of intelligence/performance tradeoff and c) results in emergent variations which for me are crucial for an RPG/roguelike game experience.

I haven’t run into the Utility AI complexity that you describe yet, but it will crop up eventually, and that’s why I want to test it. Regarding the rigidity of behavior trees, I was hoping that the Utility AI selectors would break that. Still, I haven’t gotten around to *real* AI complexity, so my assumptions are based on concrete toy examples and (limited) imagination of future complexity.

LikeLike